Overview

Teams aiming to improve quality or safety in a health system often look to tools within the electronic health record (EHR) as key to driving their efforts. However, the relationship between a team’s ability to drive quality and the organization’s ability to monitor the effectiveness of those interventions is less discussed. This study will investigate how the maturity of managing EHR interventions like alerts and order sets (i.e. clinical decision support) can enhance health systems’ abilities to conduct Plan-Do-Study-Act (PDSA) cycles of improvement.

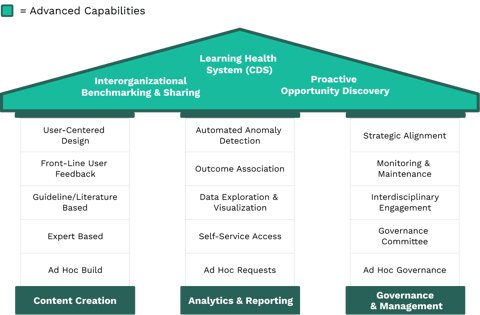

The CDS Maturity Model

Background on the CDS Maturity Model

A study published in Applied Clinical Informatics, 'Towards a Maturity Model for Clinical Decision Support Operations', serves as the framework for analyzing a PDSA cycle [1]. The research is based on interviews with clinical, informatics, and quality leaders at 80 organizations and defines three key pillars for evaluating a health system’s CDS operations:

- Content Creation - Ensure guidelines are evidence-based

- Analytics & Reporting - Evaluate performance

- Governance & Management - Manage stakeholder involvement

Application to PDSA

In the following sections, we’ll look at elements from this model (capitalized below) and see how mature EHR operations can enhance your efforts at every stage of the PDSA cycle.

Plan

INCORPORATE APPROPRIATE EXPERTISE

One of the first steps in planning an improvement initiative is to ensure the right evidence-based knowledge is identified and implemented. Many levels of evidence exist in clinical medicine, but improvement teams should always aim for interventions based on Guidelines / Literature rather than Expert-Based consensus. Although it might sound straightforward to weave knowledge into new clinical processes, the diverse array of priorities and the accelerating growth of medical knowledge make it operationally challenging for health systems to keep their interventions appropriately aligned.

DRIVE STAKEHOLDER ALIGNMENT

Even when teams design a new workflow with a well-established base of evidence, clinicians might resist these initiatives when they perceive that workflow changes may cause unintended negative consequences. Thus, a key to planning is driving broad Stakeholder Alignment, which speeds up implementation by removing change-management barriers. Moreover, by routinely engaging these stakeholders early, one can incorporate more knowledge of the workflow or subject matter expertise to enhance the outcomes. While many stakeholders might be formally recognized for their role in certain initiatives, a project team that can engage in Data Exploration & Visualization can also identify, for example, frequent users of order sets who have not been formally designated to help with the initiative.
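As a rough illustration of the last point, the sketch below counts order set usage per clinician and lists frequent users who are not yet on the project team. The usage log, the user identifiers, and the `min_uses` cutoff are all hypothetical; a real analysis would depend on what your EHR reporting tools expose.

```python
from collections import Counter


def undesignated_frequent_users(order_set_uses, designated, min_uses=3):
    """Return (user, count) pairs for frequent users not on the project team.

    order_set_uses: iterable of user IDs, one entry per order set use.
    designated: set of user IDs already formally involved in the initiative.
    min_uses: hypothetical cutoff for calling someone a frequent user.
    """
    counts = Counter(order_set_uses)
    return [
        (user, n)
        for user, n in counts.most_common()
        if n >= min_uses and user not in designated
    ]


# Illustrative data only: one entry per time a clinician opened the order set.
uses = ["dr_a", "dr_b", "dr_a", "np_c", "dr_a", "np_c", "np_c", "np_c", "dr_b"]
designated = {"dr_a"}

candidates = undesignated_frequent_users(uses, designated)
print(candidates)
```

Here `np_c` uses the order set more than anyone else but is not a designated stakeholder, which makes them a natural person to invite into the planning conversation.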

Do

Advanced institutions are investing in collecting asynchronous feedback at the point of care, which is then available to all teams for data exploration and visualization.

EMPATHIZING WITH THE END USER

After the planning stage, it’s important to test the plan at a small scale [2]. One way of testing an intervention is through Front-Line User Feedback, which can be automated or manual. Another is to gather further insight into the human-computer interaction via User-centered Design.

Although the spirit of the CDS Maturity Model is to be able to do these practices consistently, one clinician took an ad hoc approach to User-centered Design to improve a venous thromboembolism (VTE) alert. Although the improvement and informatics teams had agreed on the wording of the VTE alert, they saw subpar performance through their Data Exploration & Visualization. The improvement team used alert firing analytics to identify resident physicians as the most impacted end users. Next, the team surveyed a small group of residents to ask how they interpreted the alert. Surprisingly, the surveyed residents reported that they understood the language in the alert to mean that the patient in question did not require any prophylaxis. This was the exact opposite of the alert’s intention! After iterating on the language based on this feedback, the improvement team saw a significant improvement in adherence to this VTE alert intervention.

This example highlights the enhancements to an intervention that can come from systematizing Front-Line User Feedback and User-centered Design. It also illustrates that those capabilities help eliminate unintended consequences of interventions at scale.
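The alert firing analysis in the VTE example can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical export with one record per alert firing; the field names (`user_role`, `action`) and the action value `ordered_prophylaxis` are invented for the example and do not come from any specific EHR.

```python
from collections import Counter

# Hypothetical export: one record per time the VTE alert fired.
firings = [
    {"user_role": "resident", "action": "dismissed"},
    {"user_role": "resident", "action": "dismissed"},
    {"user_role": "resident", "action": "ordered_prophylaxis"},
    {"user_role": "resident", "action": "dismissed"},
    {"user_role": "attending", "action": "ordered_prophylaxis"},
    {"user_role": "attending", "action": "ordered_prophylaxis"},
    {"user_role": "nurse_practitioner", "action": "ordered_prophylaxis"},
]


def firings_by_role(events):
    """Count alert firings per user role, most affected role first."""
    return Counter(e["user_role"] for e in events).most_common()


def adherence_by_role(events, accepted_action="ordered_prophylaxis"):
    """Share of firings per role where the user took the recommended action."""
    totals, accepted = Counter(), Counter()
    for e in events:
        totals[e["user_role"]] += 1
        if e["action"] == accepted_action:
            accepted[e["user_role"]] += 1
    return {role: accepted[role] / totals[role] for role in totals}


most_affected_role, firing_count = firings_by_role(firings)[0]
adherence = adherence_by_role(firings)
print(most_affected_role, firing_count, adherence[most_affected_role])
```

In this made-up data, residents see the alert most often and follow it least often, which is the kind of signal that would prompt a team to survey that group about how they read the alert.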

Study

INCREASING DATA ACCESS

Many will recognize the Analytics & Reporting Pillar as paramount in the “Study” stage of a PDSA cycle. At low maturity levels, Ad Hoc Requests for data are critical to understanding an intervention’s performance and driving further modifications. Yet many organizations have too little analyst time to build and sustain the reporting for these initiatives. It’s not uncommon for reporting queues to be backlogged by more than six months! This underscores the importance of increasing operational maturity by decentralizing improvement projects via Self-Service Access and Data Exploration & Visualization tools. Maturing in this pillar delivers meaningful insights and removes data access bottlenecks that can slow and, oftentimes, prevent improvement initiatives.

The Lab Order Reduction project highlights the benefit of empowering a Lab Tech to monitor her own improvement initiative. Not only was she able to drive an entire PDSA cycle herself, but her ability to engage in Data Exploration & Visualization dramatically reduced the turnaround times for important data. Similarly, in a health system’s Hepatitis C screening initiative in the ED, the team was able to easily see how nurses responded to their alert. By studying these responses, the team identified and educated the nurses who could improve most.

Act

FACILITATE SUSTAINMENT

Over the course of an initiative, PDSA cycles become shorter as initiatives move into a sustainment phase. Many teams lose momentum over time as unrelated priorities emerge and it becomes increasingly difficult to identify further iterations. This underscores the importance of both Monitoring & Maintenance and Anomaly Detection.

Effective governance processes (Monitoring & Maintenance) for EHR interventions help identify improvements and prevent deviation from a project’s goals. Establishing bodies that consistently review the performance of alerts, order sets, or other interventions helps ensure deviations can be tracked and modifications made. Though not ideal, these governing bodies have less time to monitor past interventions as new priorities emerge. This highlights the need for Anomaly Detection of broken interventions, such as alerts that fire inappropriately. This capability allows resources to be redistributed to new projects while ensuring older initiatives are effectively sustained.
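To make the idea of Anomaly Detection concrete, here is a minimal sketch that flags days on which an alert’s firing count departs sharply from its recent baseline. It uses a simple trailing-window z-score, which is one common approach and not necessarily the method any particular tool uses; the window size, threshold, and sample counts are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(daily_counts, window=7, threshold=3.0):
    """Return indices of days whose count deviates from the trailing window.

    A day is flagged when it lies more than `threshold` standard deviations
    from the mean of the preceding `window` days.
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mu = mean(baseline)
        # Floor sigma at 1.0 so a perfectly flat baseline cannot cause
        # division by zero or flag trivial one-count changes.
        sigma = max(stdev(baseline), 1.0)
        if abs(daily_counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Illustrative daily firing counts for one alert; day 8 spikes.
counts = [40, 42, 38, 41, 39, 43, 40, 41, 120, 42]
spikes = flag_anomalies(counts)

# The same check also catches an alert that silently stops firing.
silent = flag_anomalies([40, 40, 40, 40, 40, 40, 40, 0])
print(spikes, silent)
```

A check like this can run unattended across every alert and order set, so a governing body only needs to look at the interventions that get flagged.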

How to apply this to your organization

For action-oriented quality, IT, informatics, or other healthcare improvement professionals, the Maturity Model might highlight a seemingly overwhelming number of potential improvements to make. However, the Maturity Model is more valuable when used to prioritize the next capability to improve than when used to catalog every capability gap.

We recommend a three-step process for health system leaders to consider which EHR operations capabilities will be most beneficial for their systems’ quality processes.

- Gather key quality and clinical stakeholders to align on improvement opportunities for PDSA processes. For example, there might be consensus that teams feel they aren’t getting timely insight during the Study phase.

- Engage informatics and IT resources to understand the current state of EHR operations. For example, they could identify that their improvement teams do not have enough distributed, Self-Service Access to EHR tools which might be causing data bottlenecks.

- Prioritize which capabilities will be most impactful for your PDSA cycles. Leaders will need to weigh projects according to their organization’s own goals across efficiency, effectiveness, or engagement. The CDS Maturity Model can become a shared vernacular across technical, clinical, and operational stakeholders and offer a set of tactics to continue to hone quality improvement through the EHR.


References

1. Orenstein EW, Muthu N, Weitkamp AO, Ferro DF, Zeidlhack MD, Slagle J, Shelov E, Tobias MC. Towards a Maturity Model for Clinical Decision Support Operations. Appl Clin Inform. 2019;10(5):810-819. doi:10.1055/s-0039-1697905

2. IHI.org. 2020. Science Of Improvement: Testing Changes | IHI - Institute For Healthcare Improvement. [online] Available at: http://www.ihi.org/resources/Pages/HowtoImprove/ScienceofImprovementTestingChanges.aspx [Accessed August 2020].
