Key Takeaways:

- 27% of all active alerts in the study were classified as having a “malfunction”

- Using a list of “cranky” words to filter through alert feedback can effectively prioritize alerts that may be broken

- Monitoring alert feedback comments is essential for identifying “malfunctioning” alerts that may not have variation in firing rates

INTRODUCTION

It’s been established that clinical decision support (CDS) interventions can break. Electronic health record (EHR) alerts, for example, can malfunction for a variety of reasons, leading them to overfire or underfire. We’ll also see that an intervention can be broken without any change in its firing pattern. With hundreds of deployed interventions covering a variety of clinical domains, it can be difficult to monitor for and identify CDS malfunctions. An organization might rely on end-user complaints to catch dramatic, acute shifts in firing, but this approach is unreliable and may miss subtler problems.

In their paper “Cranky comments: detecting clinical decision support malfunctions through free-text override reasons”, Aaron et al. analyzed comments that end-users submitted through a feedback mechanism within the EHR. By using this asynchronous feedback, the researchers were able to investigate the scale of alert malfunctions at their organization as well as opportunities for improvement.

APPROACH

The investigators studied the custom-built alerts in their Epic EHR system at Partners Healthcare. These alerts, called Best Practice Advisories (BPAs) in Epic, allow users to leave free-text comments when asked to take an action. The authors reviewed the feedback from all alerts with at least 10 comments in the two-year study period. Each alert was then classified as “broken” (at least 1 trigger that was inconsistent with the intended logic), “not broken, but could be improved” (not “broken”, but the alert didn’t use all available data), or “not broken”. For the purposes of this study, a “malfunction” is defined as an alert that is either “broken” or “not broken, but could be improved”. Having identified the “malfunctioning” alerts, the authors then asked whether feedback comments could help surface these problems. Given the number of alerts and comments, they evaluated different methods of ranking alerts for review. The three approaches considered were:

- Frequency of feedback comments

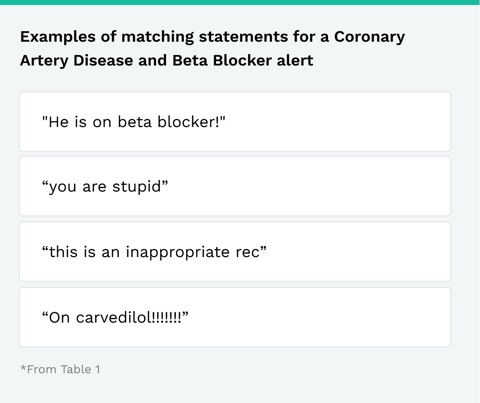

- A master list of words to filter the feedback comments against (“Cranky Comments Heuristic”; list in Supplementary Appendix B)

- A “predictive” algorithm that identifies possible malfunctions based on a sample of comments annotated by a researcher (Naïve Bayes classifier)

For the “Cranky Comments Heuristic”, the following ratio was used: (Number of alert comments matching the master list) / (Total number of alert comments)
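To make the ratio concrete, here is a minimal sketch of how a team might score and rank its own alerts. It is an illustration under stated assumptions, not the authors’ implementation: the word list below is hypothetical (the study’s actual list is in its Supplementary Appendix B), the case-insensitive whole-word matching is our assumption, and the function names are ours.

```python
import re

# Hypothetical word list for illustration only. The study's real list lives in
# its Supplementary Appendix B and is not reproduced here.
CRANKY_WORDS = {"wrong", "incorrect", "stop", "why", "already", "annoying"}


def is_cranky(comment, words=CRANKY_WORDS):
    """True if the comment contains any listed word.

    Assumption: case-insensitive, whole-word matching.
    """
    tokens = set(re.findall(r"[a-z']+", comment.lower()))
    return not tokens.isdisjoint(words)


def cranky_ratio(comments, words=CRANKY_WORDS):
    """(Number of comments matching the list) / (total number of comments)."""
    if not comments:
        return 0.0
    return sum(is_cranky(c, words) for c in comments) / len(comments)


def rank_alerts(comments_by_alert, min_comments=10, words=CRANKY_WORDS):
    """Rank alerts with enough comments by cranky ratio, highest first."""
    eligible = {
        alert: comments
        for alert, comments in comments_by_alert.items()
        if len(comments) >= min_comments
    }
    scored = [(alert, cranky_ratio(c, words)) for alert, c in eligible.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

The `min_comments` default mirrors the study’s inclusion threshold of at least 10 comments. Alerts at the top of the ranking are candidates for manual review, not confirmed malfunctions.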

RESULTS

The authors identified 120 alerts that met the inclusion criterion of at least 10 comments. Of these, 75 (62.5%) were classified as having a “malfunction”. When active alerts with fewer than 10 comments are also counted, these “malfunctioning” alerts represent 27% of all alerts in the EHR.

When the authors compared the three ranking approaches, the Naïve Bayes classifier performed best (AUC 0.738). The “Cranky Comments Heuristic” performed almost as well (AUC 0.723). Ranking by comment frequency alone performed poorly (AUC 0.487), about the same as random ranking.
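For readers less familiar with AUC in a ranking context, the sketch below uses the standard pairwise definition: the probability that a randomly chosen “malfunctioning” alert receives a higher score than a randomly chosen non-malfunctioning one, with ties counted as half. This summary does not describe how the authors computed their AUCs, so treat the function (and its name) as an illustration of the metric rather than their method.

```python
def ranking_auc(scores, labels):
    """AUC of a ranking via pairwise comparison.

    scores: one ranking score per alert (e.g. a cranky-comment ratio)
    labels: truthy if the alert was judged to be malfunctioning
    """
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one alert of each class")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # malfunctioning alert ranked above a healthy one
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))
```

A score that carries no information about malfunctions lands near 0.5 under this definition, which is why an AUC of 0.487 reads as roughly equivalent to random ranking.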

The authors note that the “Cranky Comments Heuristic” took significantly less work to implement and performed similarly to the Naïve Bayes classifier.

DISCUSSION

The finding that 27% of custom alerts in the EHR have a “malfunction” is not only remarkable, but worrisome. The authors state that this finding is “much higher than expected and unacceptable from a safety perspective.” It’s important to note that a “malfunction” doesn’t necessarily mean it stopped firing altogether or that it suddenly bombarded end-users. However, even the broad definition of “malfunction” in this study points to a significant need for further monitoring and maintenance of CDS. The “malfunctions” in this evaluation represent incorrectly targeted clinicians and inappropriate triggering criteria that contribute to alarm fatigue and clinician burnout.

Furthermore, CDS should only be implemented to drive a downstream outcome. Too often, a rollout ends once the intervention has been planned, built, and deployed. Those with quality improvement experience will recognize this as a premature end to an evaluation cycle. Under the Plan-Do-Study-Act (PDSA) framework, the next step after implementing any intervention is to look at the data to assess improvement (or detrimental impacts). In fact, our published CDS Maturity Model explicitly calls out “Front-line user feedback” as a level within “Content Creation”. The “cranky” comments study offers a rich source of ideas for iterative improvements that lead to desired clinical outcomes.

EVALUATING YOUR CDS USING THESE METHODS

Phrase Health facilitates the collection of direct user feedback from the EHR system with a link that can be embedded in CDS, in addition to monitoring of Alert Actions Taken and Override rates. By combining this feedback with other analytics and management tools, teams can effectively iterate on interventions to drive improvement in clinical care while decreasing the burden on clinicians.

Journal Club Source

Aaron, Skye, et al. “Cranky comments: detecting clinical decision support malfunctions through free-text override reasons.” Journal of the American Medical Informatics Association, vol. 26, no. 1, 2018, pp. 37–43, doi:10.1093/jamia/ocy139.